CI/CD Pipeline: How to Reduce Automated Software Testing Duration by 85% Without Compromising Quality or Incurring Additional Costs

Introduction

Automated tests integrated into a CI/CD pipeline offer substantial benefits, but to be truly effective, they must keep pace with the development cycle and deliver feedback as quickly as possible. Few things are more frustrating for developers than completing their implementation, only to be left waiting for hours to find out whether their code passes the regression tests and the DevSecOps tests defined for the pipeline.

At PlaxidityX (formerly Argus Cyber Security), we are committed to optimizing development cycles both for our internal product development and for our customers.

Let me share a story about how we reduced nightly testing execution times from 2 hours and 44 minutes to just 21 minutes at one of our leading product development teams.

Step 1 – Analyzing our Current Situation

The first step towards improving testing execution was to understand the existing conditions and identify the root causes behind our testing duration times.

The team is responsible for testing a web-based application built as a wizard. It leads the user through a series of steps to configure different parameters and features in the system, and eventually provides a downloadable output that the user can use.

Our tests were divided into two main categories that together provide solid coverage:

- Functional tests – testing different functions, logic, and elements of the application

- End-to-end (E2E) tests – executing different flows of the system, downloading the output, and verifying that the output includes what we expected.

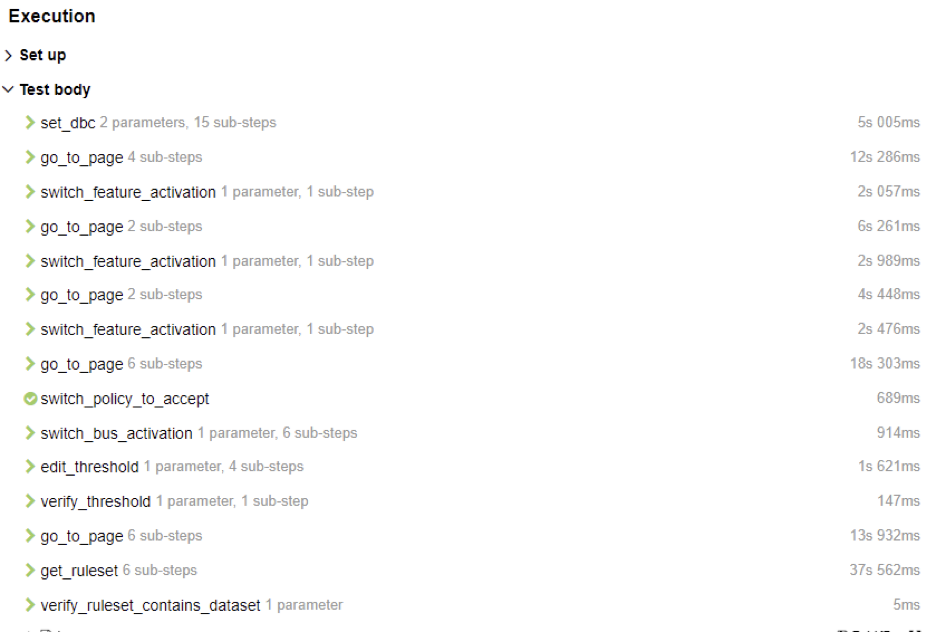

Diving deeper into our Allure reports and code, we uncovered interesting insights about the execution duration:

- Our tests ran serially, so the total execution time was the sum of all individual test case durations

- Even simple functions took a long time to complete

- Some functions took significantly more time than others

- There was redundancy in our test cases, in the steps within those test cases, and in our rerun mechanism

When we analyzed why the steps took such a long time, we realized it was a combination of a few factors:

- The machine resources running the application were insufficient, causing the application to respond slowly

- Test efficiency was suboptimal – we interacted with the UI instead of using the more efficient RESTful API (when the UI element is not the tested component)

Next, we tried to figure out why some steps took significantly more time than others. We saw, for example, that the “go to page” step was inefficiently coded. To reach the relevant page, it behaved like a user and iterated over every screen on the way to its target, instead of behaving like automation infrastructure and simply “jumping” to the target screen.
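
The difference can be sketched in a few lines. This is an illustration only, not the real infrastructure code: FakeBrowser, WIZARD_STEPS, and the /wizard/<step> URL scheme are hypothetical, and the sketch assumes the application allows a step to be opened directly by URL.

```python
from dataclasses import dataclass, field

# Hypothetical wizard steps, in the order a user would click through them.
WIZARD_STEPS = ["project", "network", "features", "review", "download"]


@dataclass
class FakeBrowser:
    """Stand-in for a real browser driver; it only records page loads."""
    loads: list = field(default_factory=list)

    def open(self, url: str) -> None:
        self.loads.append(url)


def go_to_page_like_a_user(browser: FakeBrowser, target: str) -> None:
    """Slow variant: load every screen on the way to the target."""
    for step in WIZARD_STEPS[: WIZARD_STEPS.index(target) + 1]:
        browser.open(f"/wizard/{step}")


def go_to_page_directly(browser: FakeBrowser, target: str) -> None:
    """Fast variant: jump straight to the target screen."""
    if target not in WIZARD_STEPS:
        raise ValueError(f"unknown wizard step: {target}")
    browser.open(f"/wizard/{target}")


slow, fast = FakeBrowser(), FakeBrowser()
go_to_page_like_a_user(slow, "review")
go_to_page_directly(fast, "review")
print(len(slow.loads), "page loads vs", len(fast.loads))  # 4 page loads vs 1
```

The saving grows with the depth of the target screen, which is why steps deep in the wizard stood out in the reports.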

Another issue we aimed to address was the login mechanism in our test cases. Typically, each test case opens a browser, navigates to the URL, and performs a login during its setup phase. Since only some of our test cases specifically challenge the validity of the login mechanism, there is no need for every test case to go through this process. Instead, we can log in once and maintain the session for the rest of the test cases, allowing them to skip the login phase.

Note: Flaky tests (tests that generate inconsistent results) are a significant challenge that often leads to rerunning test cases and wasting valuable time. Our next post will dive into strategies for managing and mitigating flaky tests. Stay tuned!

Step 2 – Implementation of the Required Improvements

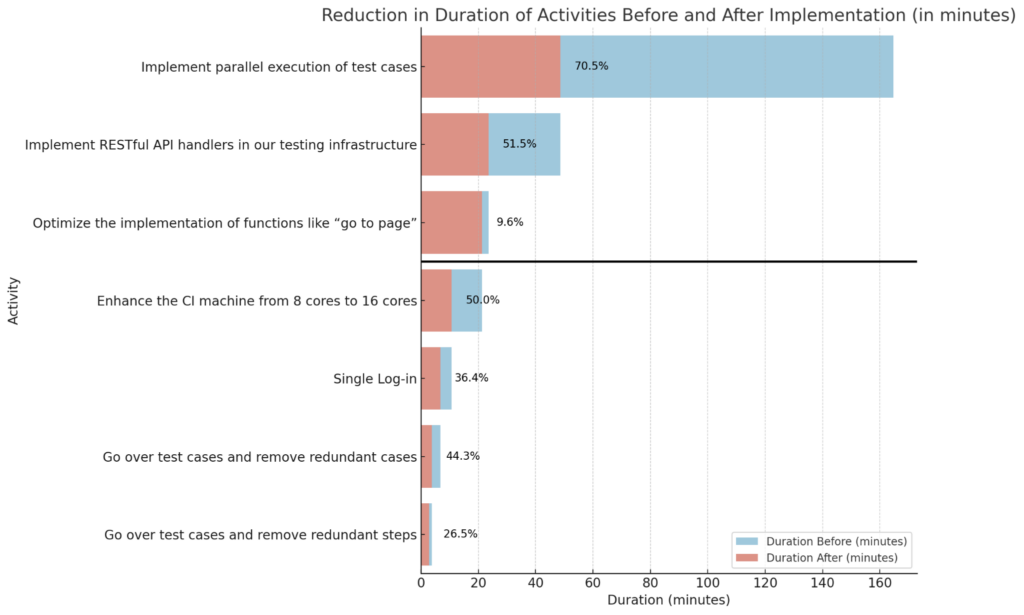

Based on the insights and information gathered in the first step, we defined the following action items:

- Implement parallel execution of test cases

- Enhance the resources of the machine running the Software under Test (SUT)

- Implement RESTful API handlers in our testing infrastructure

- Optimize the implementation of functions such as “go to page”

- Optimize the login phase

- Go over test cases and remove redundant cases

- Go over test cases and remove redundant steps

It was clear that the above action items were too much for one person and needed to involve several team members. After assigning these items, we prioritized achieving “quick wins” to produce visible improvements early on and motivate the team towards our target of reducing feedback time to 10-15 minutes.

Parallel test execution

Implementing parallel test execution is universally acknowledged as beneficial, yet it presents several challenges:

- The test runner must support parallel execution

- The system under test should support multiple simultaneous interactions, or we should set up multiple test environments

- Each test should be able to run independently – tests should set up their required state and clean up after themselves

- Test cases should not override shared resources used by other test cases, nor should they conflict with access to shared resources

Here’s how we dealt with these challenges:

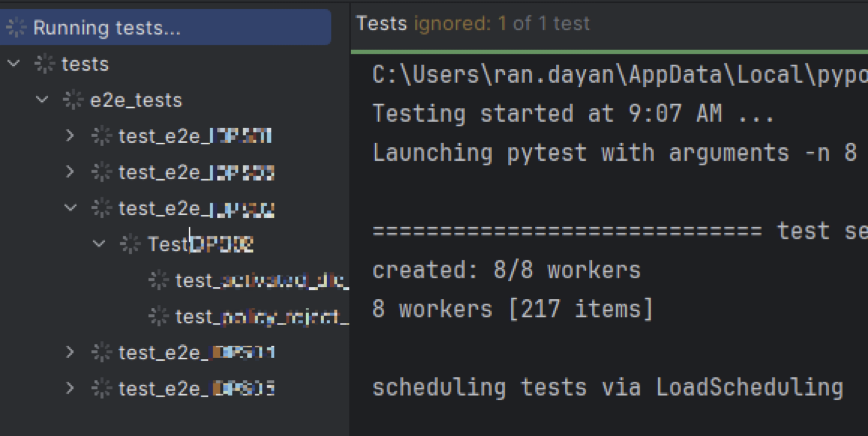

- We use pytest as our test runner, which has an excellent plugin for parallel execution named “pytest-xdist”

- The tested system does support multiple simultaneous interactions, but handling them made it run much slower. A shared effort with the DevOps team was required to optimize system resources

- Test independence was already implemented (a best practice for test automation in general)

- Managing output files was a notable challenge – tests running in parallel were overwriting each other’s files. We resolved this by assigning unique paths for each test output, incorporating the test name and the exact time of execution. UUIDs could also serve this purpose.
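
One way to build such paths is sketched below. It combines the test name and a timestamp, as described above. The worker ID and the short UUID suffix are extra safeguards assumed here rather than taken from our implementation, and the helper name unique_output_dir is hypothetical. PYTEST_XDIST_WORKER is the environment variable pytest-xdist sets in each worker process.

```python
import os
import re
import tempfile
import uuid
from datetime import datetime, timezone
from pathlib import Path


def unique_output_dir(base: Path, test_name: str) -> Path:
    """Create a per-test output directory that parallel workers cannot share."""
    # Test IDs can contain characters such as "[" or "/" that are awkward in paths.
    safe_name = re.sub(r"[^A-Za-z0-9_.-]+", "_", test_name)
    timestamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%f")
    # Falls back to "main" when the tests are not running under pytest-xdist.
    worker = os.environ.get("PYTEST_XDIST_WORKER", "main")
    # A random suffix guards against two tests starting in the same microsecond.
    suffix = uuid.uuid4().hex[:8]
    path = base / f"{safe_name}-{worker}-{timestamp}-{suffix}"
    path.mkdir(parents=True, exist_ok=False)
    return path


with tempfile.TemporaryDirectory() as tmp:
    first = unique_output_dir(Path(tmp), "test_download[chrome]")
    second = unique_output_dir(Path(tmp), "test_download[chrome]")
    print(first.name)
    print(second.name)
```

Passing exist_ok=False makes a collision fail loudly instead of silently mixing two tests' files.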

One important note is that the number of workers (independent processes) worth starting is bounded by the number of CPUs on the executing machine. pytest-xdist’s “-n auto” option, for example, starts one worker per available CPU. The more CPUs, the more tests can be executed in parallel, and the shorter the execution time, provided the system under test can keep up with the load.
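
As a small illustration, the helper below assembles a pytest-xdist command line sized to the machine. The function name build_pytest_command and the tests/nightly directory are hypothetical; “-n” is pytest-xdist’s option for the number of worker processes.

```python
import os
from typing import List, Optional


def build_pytest_command(test_dir: str, workers: Optional[int] = None) -> List[str]:
    """Return a pytest command line that runs tests in parallel via pytest-xdist."""
    if workers is None:
        # os.cpu_count() can return None, so fall back to a single worker.
        workers = os.cpu_count() or 1
    if workers < 1:
        raise ValueError("workers must be at least 1")
    # "-n <N>" sets the number of worker processes; "-n auto" would let the
    # plugin detect the CPU count itself.
    return ["pytest", test_dir, "-n", str(workers)]


print(build_pytest_command("tests/nightly", workers=8))
# ['pytest', 'tests/nightly', '-n', '8']
```

Pinning the number explicitly can be useful when the CI machine is shared and not every CPU should be claimed by the test run.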

After two working days, we successfully integrated parallel execution using machines with 8 CPUs, shortening our nightly run from 2 hours and 44 minutes to 48 minutes!

We plan to increase the number of CPUs per machine in the future to gain further improvements.

Implement RESTful API handlers in our testing infrastructure

Tests typically consist of three phases: setup, execution, and teardown.

The operations that happen during the setup and teardown phases do not test the core functionality, so their implementation can be flexible as long as they deliver the necessary functionality. Accordingly, there’s no need to rely on heavy, slow UI interactions when lighter, faster RESTful API calls can achieve the same outcomes efficiently.

To use the RESTful API calls in our test cases, we implemented the following:

- Developing an integration layer – a layer within our testing framework that handles all interactions with the RESTful API. This layer includes functions for sending requests, handling responses, and managing authentication tokens.

- Implementing RESTful API-based functions – The functions we use as part of the tests (for example, “create_new_project”) received an additional implementation built on the RESTful API handlers. Depending on the given configuration, they can now operate either through the RESTful API or through UI interactions.

- Converting the test case setups and teardowns to only use RESTful API functions
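
The three items above can be sketched as follows. Everything except the function name create_new_project is an assumption made for illustration: the ApiClient class, the /api/login and /api/projects endpoints, the bearer-token scheme, and the mode switch are hypothetical. The HTTP transport is injected so the sketch runs without a server.

```python
from typing import Callable, Dict, Optional

# A transport takes (method, path, headers, body) and returns decoded JSON.
# A real framework would wrap an HTTP client here.
Transport = Callable[[str, str, Dict[str, str], Optional[dict]], dict]


class ApiClient:
    """Hypothetical integration layer: sends requests and manages the auth token."""

    def __init__(self, transport: Transport, username: str, password: str) -> None:
        self._transport = transport
        self._credentials = {"username": username, "password": password}
        self._token: Optional[str] = None

    def _headers(self) -> Dict[str, str]:
        if self._token is None:
            # Authenticate lazily and keep the token for later requests.
            reply = self._transport("POST", "/api/login", {}, self._credentials)
            self._token = reply["token"]
        return {"Authorization": f"Bearer {self._token}"}

    def post(self, path: str, body: dict) -> dict:
        return self._transport("POST", path, self._headers(), body)

    def delete(self, path: str) -> dict:
        return self._transport("DELETE", path, self._headers(), None)


def create_new_project(name: str, mode: str, api: ApiClient, ui=None) -> str:
    """Create a project through the API or the UI, depending on configuration."""
    if mode == "api":
        return api.post("/api/projects", {"name": name})["id"]
    if mode == "ui":
        return ui.create_project_via_wizard(name)  # hypothetical page-object call
    raise ValueError(f"unknown mode: {mode}")


def fake_transport(method, path, headers, body):
    """In-memory stand-in for the server, used only to exercise the sketch."""
    if path == "/api/login":
        return {"token": "abc123"}
    assert headers["Authorization"] == "Bearer abc123"
    if method == "POST" and path == "/api/projects":
        return {"id": "proj-1", "name": body["name"]}
    return {}


api = ApiClient(fake_transport, "qa-user", "secret")
project_id = create_new_project("demo", mode="api", api=api)  # setup via API
api.delete(f"/api/projects/{project_id}")                     # teardown via API
print(project_id)  # proj-1
```

Keeping both implementations behind one function name means a test that verifies the UI flow itself can still select the UI mode, while every other test gets the faster path.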

This shift required extensive collaboration with our development team and led to significant reductions in test case execution times – from about 2 minutes per test to just 30 seconds – bringing our overall nightly execution time down to 23 minutes.

Additional improvements

In addition to the above, we’ve also implemented other activities from the list of action items, improving the efficiency of our testing infrastructure and increasing our confidence in the CI/CD process. Most importantly, these improvements were all achieved without compromising the quality of the tests. In other words, we now cover the same functionality and security in less time (see chart).

Currently, our test execution time is down to 21 minutes – our goal of 10-15 minutes is in sight and we’re not stopping till we get there! 💪
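
For readers who want to check the arithmetic, the short script below recomputes the saving at each stage from the durations reported in this post, taking the 2 hours and 44 minutes quoted in the introduction as the baseline. The stage labels are ours for illustration.

```python
BASELINE_MINUTES = 2 * 60 + 44  # nightly run before any changes

# Nightly duration in minutes after each stage, as reported in this post.
stages = {
    "parallel execution": 48,
    "REST API setup/teardown": 23,
    "additional improvements": 21,
}


def reduction_percent(before: float, after: float) -> float:
    """Share of the original duration that was eliminated, in percent."""
    return (before - after) / before * 100


for stage, minutes in stages.items():
    saved = reduction_percent(BASELINE_MINUTES, minutes)
    speedup = BASELINE_MINUTES / minutes
    print(f"{stage:<26} {minutes:>3} min  -{saved:.1f}%  {speedup:.1f}x faster")
```

At 21 minutes the run is about 87% shorter than the baseline, which is in line with the roughly 85% figure in the title.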

Key Takeaways for Your Organization

If you’ve read this far, you’re probably interested in learning how to improve the test automation at your organization.

Here are some takeaways from our experience that I hope you’ll consider.

Technical Tips and Resources

- Explore a parallel test execution plugin for your testing infrastructure:

  - Python – pytest – pytest-xdist

  - Python – unittest – nose2

  - Java – JUnit 5 – Built-in support (opt-in via the junit.jupiter.execution.parallel.enabled configuration parameter)

  - Java – TestNG – Built-in support

  - JavaScript – Jest – Built-in support

  - JavaScript – Mocha – Built-in support (the --parallel flag, available since Mocha 8)

  - JavaScript – Cypress – Built-in support

- If you’re not yet using a RESTful API in your automation, start planning your RESTful API testing strategy and ask the relevant development manager to provide you with the required platform.

- If you’re using Playwright and you want to implement a one-time login mechanism, check out this blog post. Other frameworks should have their own methods to achieve the same result.

Wrap-Up

While this post focuses on reducing CI execution times, the same approach applies to other automation improvement projects, whether it’s implementing a performance testing suite, embedding a new DevSecOps tool into the pipeline, or integrating AI to automate test case generation.

Here’s our basic plan for a successful QA automation improvement project:

- Analyze the current state and why it works the way it does

- Break down the action items into small manageable steps

- Plan action item implementation on a timeline with the relevant resource allocations

- Constantly monitor each activity

Lastly, don’t underestimate the importance of a quick win. Identify a step that can be easily completed and has a significant impact. In addition to boosting confidence within your team, it will also make it easier to justify your efforts to other stakeholders in your organization.

PlaxidityX is the global leader in automotive cyber security.

Published: September 9th, 2024