AI-Powered Automotive Threat Detection: Turning Noise into Insight

TL;DR

As vehicles become increasingly software-defined with myriad cybersecurity sensors, traditional alert-driven threat detection approaches are no longer viable. This blog explores how the PlaxidityX onboard AI model and threat detection architecture transform raw in-vehicle events into contextual, attack-aware intelligence, reducing noise and enabling real-time classification of malicious behavior. By embedding AI-driven detection directly into the vehicle, this approach represents the future of scalable and resilient automotive cybersecurity.

Today’s automobile is no longer a machine of gears and engines – it’s a rolling data center powered by software, ECUs, and cybersecurity sensors. But this growing sophistication also carries a price – an expanding attack surface for potential hackers.

With each new vulnerability disclosed in blogs, research papers, and security advisories, OEMs and automotive vendors are turning to cybersecurity companies for solutions to monitor and detect anomalous behavior within vehicles.

Over time, this has led to a growing ecosystem of automotive cybersecurity components embedded in vehicles, each designed to detect potential threats. However, when detection systems are not fully tuned, they can flood analysts with repetitive or low-context alerts. Many organizations route these alerts to a centralized Security Operations Center (SOC), but even this reactive approach often leaves analysts overwhelmed by “noise.”

The missing link? Onboard, AI-powered threat detection that filters, correlates, and classifies events before they ever reach the SOC. By moving intelligence closer to the source, the vehicle itself becomes the first line of defense.

Introducing AI-Driven Automotive Threat Intelligence

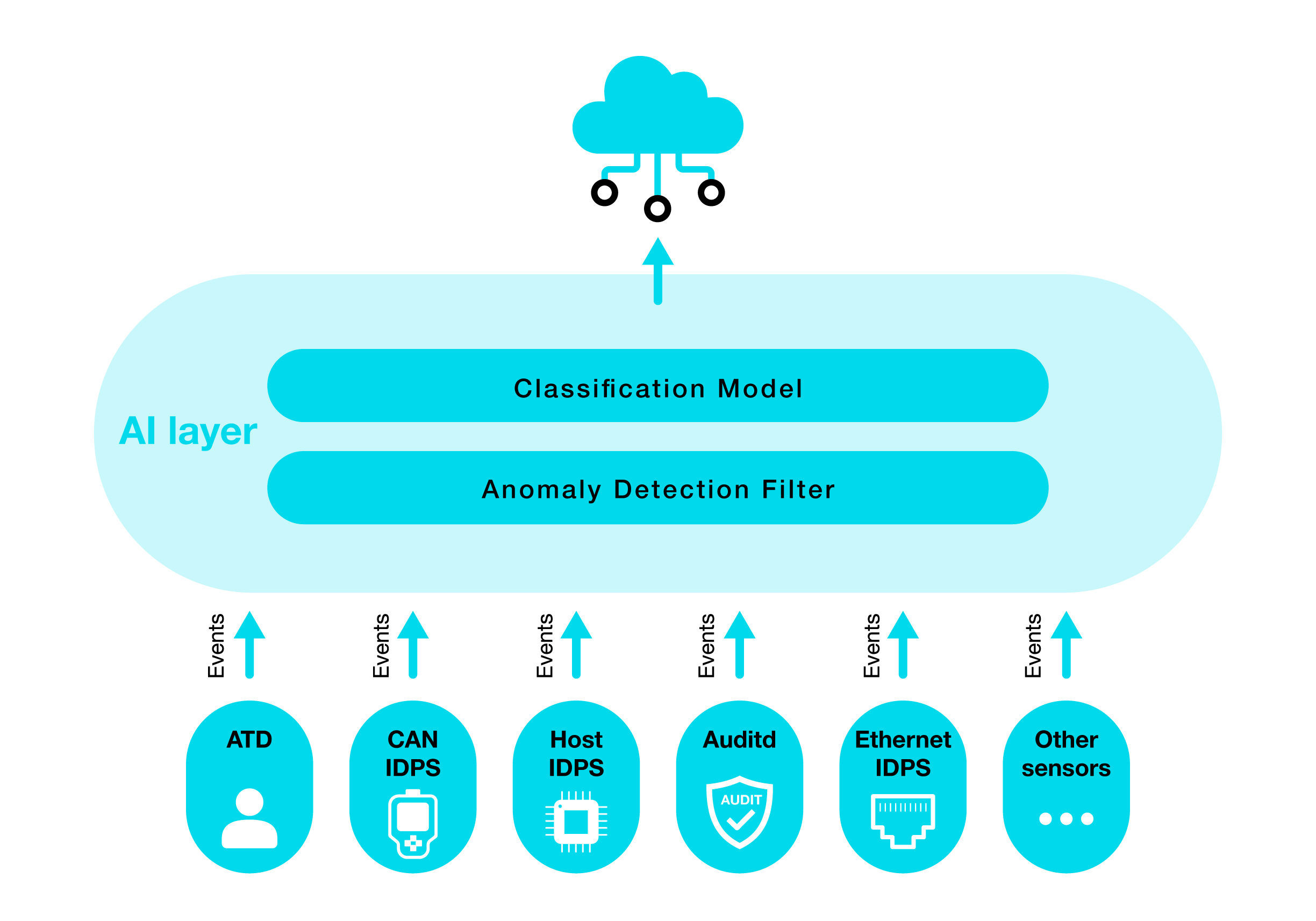

Imagine a vehicle that doesn’t just passively report anomalies, but actively understands them. Our solution integrates directly into the vehicle’s architecture, ingesting events from multiple onboard detection systems, applying machine learning models to reduce false positives, classifying potential attacks, and correlating them with known adversarial behaviors from the Automotive Threat Matrix (ATM).

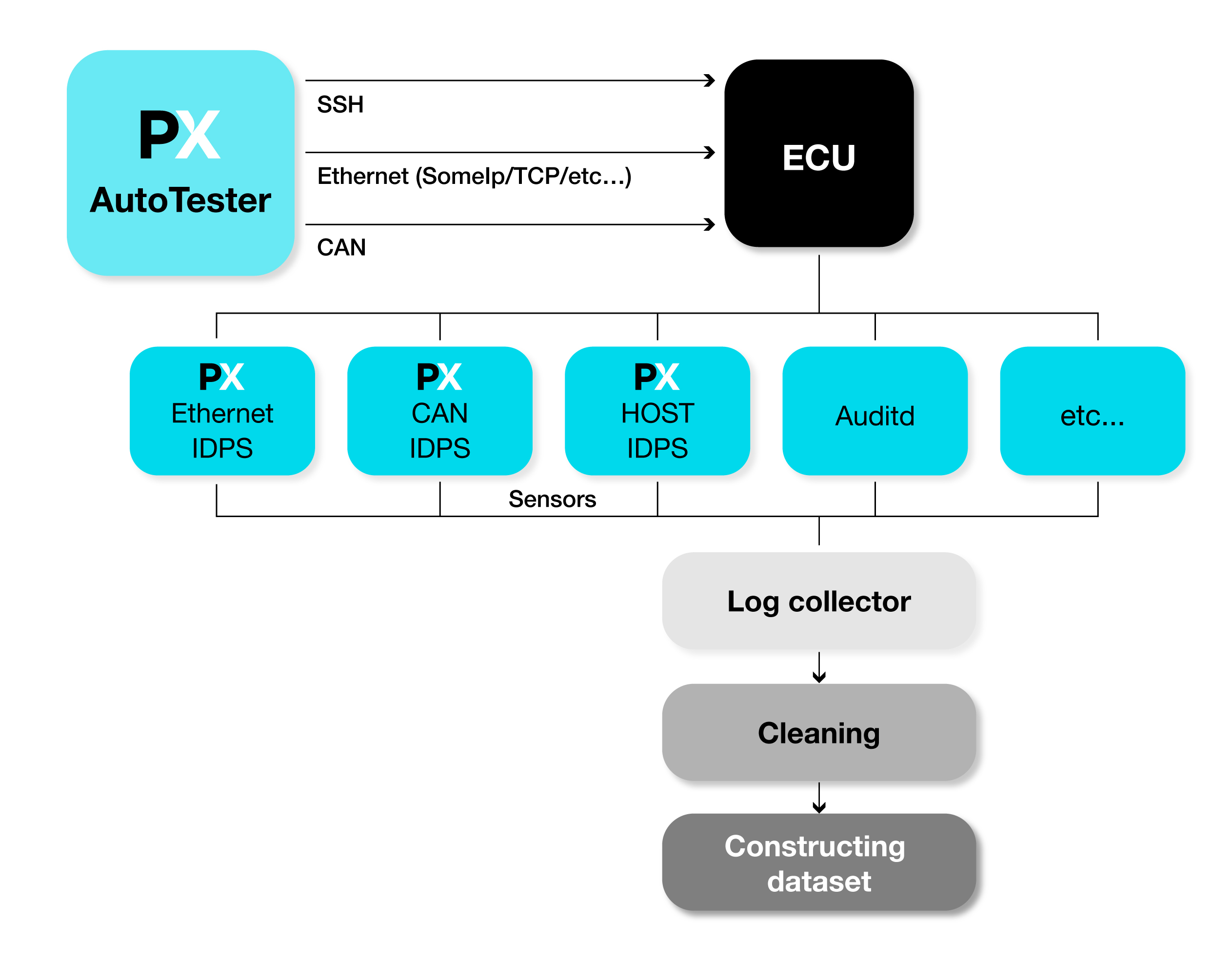

A high-level view of the architecture is depicted in the diagram below.

AI-Driven Automotive Threat Intelligence Architecture

Threat Assessment: Know the Battlefield

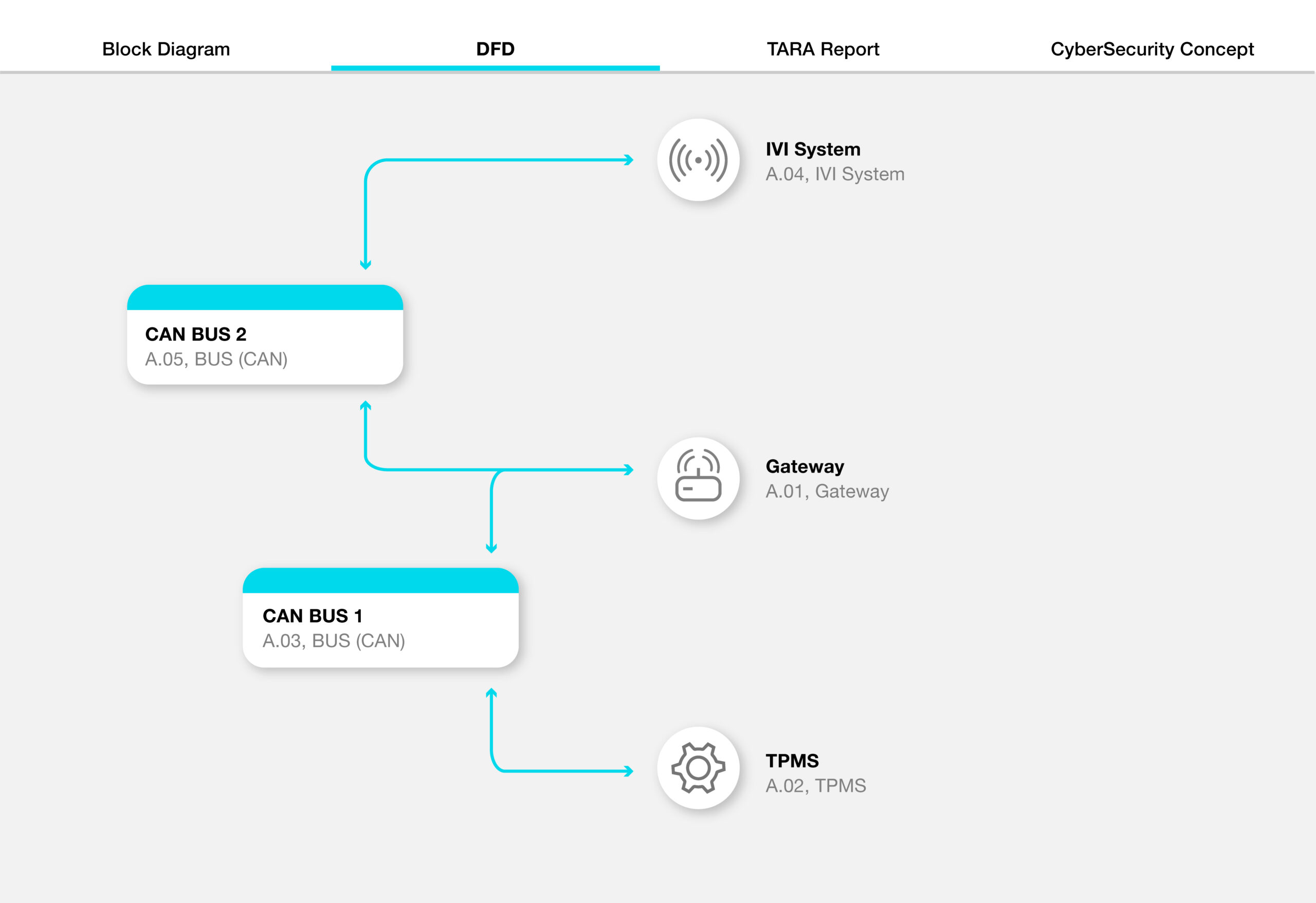

Effective detection starts with a comprehensive understanding of potential attack scenarios. That’s where our Security AutoDesigner tool comes in.

This tool streamlines system-wide threat modeling. With just a few inputs, it generates a full TARA (Threat Analysis and Risk Assessment) report, visualizing vulnerabilities and attack paths across the vehicle.
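A full TARA (as defined in ISO/SAE 21434) covers much more than attack paths: asset identification, impact rating, attack feasibility and risk determination. The attack-path part alone can be illustrated with a small graph search. The sketch below is not how Security AutoDesigner works internally; the vehicle topology and component names are hypothetical.

```python
# Hypothetical vehicle topology: an edge A -> B means "a foothold on A can reach B".
# Component names are illustrative only, not taken from Security AutoDesigner.
TOPOLOGY = {
    "cellular_modem": ["telematics_unit"],
    "obd_port": ["central_gateway"],
    "telematics_unit": ["central_gateway"],
    "infotainment": ["central_gateway"],
    "central_gateway": ["powertrain_ecu", "body_ecu"],
    "powertrain_ecu": [],
    "body_ecu": [],
}


def attack_paths(graph, entry, target, path=None):
    """Enumerate all simple paths from an entry point to a target asset (depth-first search)."""
    path = (path or []) + [entry]
    if entry == target:
        return [path]
    paths = []
    for nxt in graph.get(entry, []):
        if nxt not in path:  # skip nodes already on this path to avoid cycles
            paths.extend(attack_paths(graph, nxt, target, path))
    return paths


for entry_point in ["cellular_modem", "obd_port", "infotainment"]:
    for p in attack_paths(TOPOLOGY, entry_point, "powertrain_ecu"):
        print(" -> ".join(p))
```

Each printed path is a candidate attack path that a TARA would then rate for feasibility and impact.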

Anomaly Detection: Learning the Vehicle’s Normal

Once the threat landscape is defined, we proceed to detection: learning what ‘normal’ looks like for each vehicle.

Before running anomaly detection, we configured all intrusion detection systems (IDSs) to operate in high-sensitivity mode, so that even subtle deviations are reported.
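As a minimal illustration of “learning normal,” consider one signal this post mentions later: the cycle of periodic CAN messages. The sketch below learns the mean and standard deviation of the inter-arrival time per CAN ID from clean traffic and flags gaps that deviate by more than k standard deviations. It is a generic baseline technique, not PlaxidityX’s IDS logic; timestamps in seconds, k = 4 and the 100 µs standard-deviation floor are assumptions.

```python
import statistics


def learn_baseline(timestamps_by_id):
    """Learn (mean, stdev) of the inter-arrival time for each CAN ID from normal traffic."""
    baseline = {}
    for can_id, ts in timestamps_by_id.items():
        gaps = [b - a for a, b in zip(ts, ts[1:])]
        baseline[can_id] = (statistics.mean(gaps), statistics.stdev(gaps))
    return baseline


def is_anomalous(baseline, can_id, gap, k=4.0, min_std=1e-4):
    """Flag a gap more than k standard deviations away from the learned cycle time."""
    if can_id not in baseline:
        return True  # an ID never seen while learning is itself unusual
    mean, std = baseline[can_id]
    # min_std (assumed 100 us) keeps perfectly periodic IDs from getting a zero threshold
    return abs(gap - mean) > k * max(std, min_std)


# Normal traffic: ID 0x1A0 every 10 ms with +/-0.2 ms jitter.
normal = {0x1A0: [i * 0.010 + (0.0002 if i % 2 else 0.0) for i in range(100)]}
base = learn_baseline(normal)
print(is_anomalous(base, 0x1A0, 0.010))  # False: matches the learned cycle
print(is_anomalous(base, 0x1A0, 0.002))  # True: an injected frame breaks the cycle
print(is_anomalous(base, 0x7DF, 0.050))  # True: ID never seen during learning
```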

Attack Classification: Context is King

An anomaly alone doesn’t tell the full story. To understand the attack vector, assess its potential impact, and derive mitigations, Security AutoDesigner correlates detected anomalies with the Auto-ISAC Automotive Threat Matrix (ATM), an industry-standard framework that enumerates adversary tactics and techniques based on real-world automotive cyber incidents.
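To make “correlating anomalies with ATM techniques” concrete, here is a deliberately simple rule-based sketch. It is not this post’s method, which relies on the supervised model described in the following sections. The two technique names come from the examples later in this post; the event-type names and the ordered-subsequence rule are hypothetical.

```python
# Hypothetical signatures: event types that, seen in this order, suggest an ATM technique.
SIGNATURES = {
    "Abuse Standard Diagnostic Protocol for Persistence": [
        "suspicious_uds_request",
        "system_modification",
    ],
    "File and Directory Discovery": ["abnormal_file_enumeration"],
}


def contains_in_order(events, pattern):
    """True if pattern appears in events as an ordered (not necessarily contiguous) subsequence."""
    it = iter(events)
    # `step in it` advances the shared iterator, so later steps must occur after earlier ones
    return all(step in it for step in pattern)


def correlate(events):
    """Return the names of ATM techniques whose signature occurs in the event stream."""
    return [name for name, pattern in SIGNATURES.items() if contains_in_order(events, pattern)]


stream = ["can_cycle_violation", "suspicious_uds_request", "ssh_login", "system_modification"]
print(correlate(stream))  # ['Abuse Standard Diagnostic Protocol for Persistence']
```

Hand-written rules like these are brittle and only match what someone thought to encode, which is the motivation for learning the mapping from labeled data instead.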

Data Generation for Classification

Our supervised classification model relies on high-quality, labeled data:

- Automated Attack Simulation: Using PlaxidityX AutoTester, we simulate realistic attack scenarios such as breaking CAN message cycles, sending suspicious UDS commands, and executing abnormal host operations.

- Scenario Construction: These tests map directly to ATM techniques. For instance:

  - Sending suspicious UDS messages followed by system modification aligns with the ATM technique “Abuse Standard Diagnostic Protocol for Persistence.”

  - Simpler tests, like abnormal file discovery, map to the ATM technique “File and Directory Discovery.”

- Dataset Curation: Only the generated event data is used, abstracting away proprietary sensor specifics and focusing on observed behaviors. Validation datasets remain strictly separate to prevent data leakage.
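The post does not say how the validation split is made. One common way to keep validation data strictly separate is to split by test run rather than by individual sequence, because windows cut from the same run share events and context and would otherwise leak across the split. A minimal sketch under that assumption, with hypothetical field names (`run_id`, `label`, `events`):

```python
import random


def split_by_run(samples, val_fraction=0.2, seed=0):
    """Split labeled sequences so that all windows from one test run land on the same side."""
    run_ids = sorted({s["run_id"] for s in samples})
    rng = random.Random(seed)
    rng.shuffle(run_ids)
    n_val = max(1, int(len(run_ids) * val_fraction))
    val_runs = set(run_ids[:n_val])
    train = [s for s in samples if s["run_id"] not in val_runs]
    val = [s for s in samples if s["run_id"] in val_runs]
    return train, val


# Toy data: 10 test runs, 3 windows each, labels cycling over 5 hypothetical classes.
samples = [
    {"run_id": f"run{r}", "label": r % 5, "events": [r, w]}
    for r in range(10)
    for w in range(3)
]
train, val = split_by_run(samples)
print(len(train), len(val))  # 24 6
```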

Test Architecture

- PlaxidityX AutoTester executes all relevant tests on the vehicle network or ECU.

- Sensors generate the raw telemetry and event data during testing.

- Log Collector gathers logs from multiple sources and converts them into a unified format.

- Cleaning phase removes artifacts introduced by the test procedure itself (e.g., SSH sessions used to run commands may generate protocol-related events that could mislead analysis).

- Dataset construction formalizes the cleaned data into a consistent structure suitable for model training.
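The collection, cleaning and dataset-construction steps above can be sketched as three small functions. Everything here is an illustrative assumption: the raw record formats, the source names `host_ids` and `network_ids`, the `sshd` marker used to recognize harness-generated events, and the use of non-overlapping windows. Only the window length of 50 comes from the model description in this post.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """Unified event record (hypothetical schema)."""
    timestamp: float
    sensor_id: int
    event_id: int
    source: str
    detail: str


def normalize(raw, source):
    """Log Collector step: map a source-specific record (hypothetical fields) to the unified format."""
    if source == "host_ids":
        return Event(raw["ts"], raw["sensor"], raw["code"], source, raw.get("cmdline", ""))
    if source == "network_ids":
        return Event(raw["time"], raw["sensor_id"], raw["alert_id"], source, raw.get("bus", ""))
    raise ValueError(f"unknown source: {source}")


def clean(events, harness_markers=("sshd",)):
    """Cleaning step: drop events caused by the test harness itself, e.g. the SSH session."""
    return [e for e in events if not any(m in e.detail for m in harness_markers)]


def to_sequences(events, length=50):
    """Dataset step: sort by time, cut into non-overlapping (event_ids, sensor_ids) windows."""
    events = sorted(events, key=lambda e: e.timestamp)
    windows = []
    for start in range(0, len(events) - length + 1, length):  # a short tail is dropped
        chunk = events[start:start + length]
        windows.append(([e.event_id for e in chunk], [e.sensor_id for e in chunk]))
    return windows


raw_host = [{"ts": t * 0.1, "sensor": 1, "code": 7, "cmdline": "cat /etc/passwd"} for t in range(60)]
raw_host.append({"ts": 0.05, "sensor": 1, "code": 9, "cmdline": "sshd: root@pts/0"})
raw_net = [{"time": t * 0.1 + 0.01, "sensor_id": 2, "alert_id": 3, "bus": "CAN1"} for t in range(45)]
events = [normalize(r, "host_ids") for r in raw_host] + [normalize(r, "network_ids") for r in raw_net]
sequences = to_sequences(clean(events))
print(len(sequences))  # 2 windows of 50 events; the SSH-session event was removed
```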

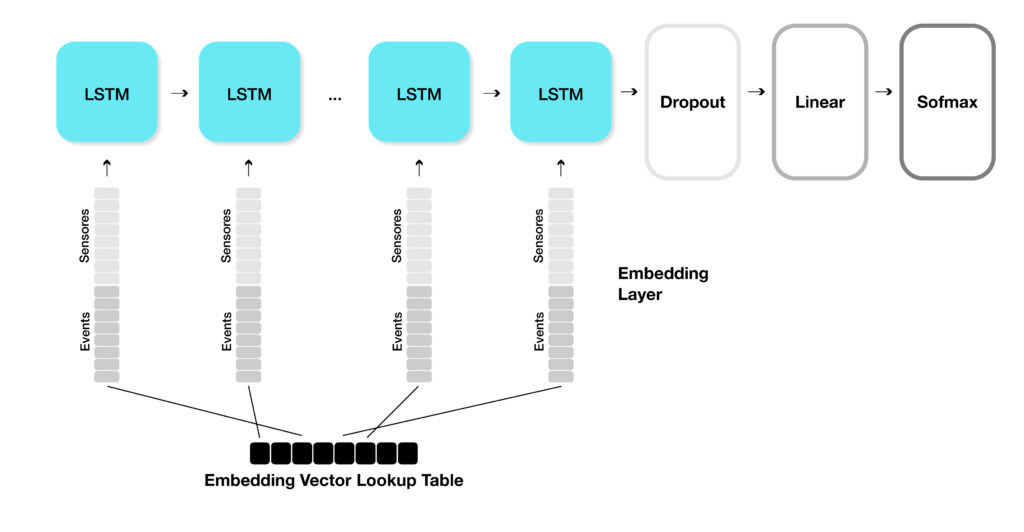

AI Model Architecture

Our model processes event sequences with contextual sensor information:

- Inputs: Event IDs and Sensor IDs, each as sequences of length 50.

- Embeddings: Separate embedding layers for events and sensors (dim = 36).

- Sequence Modeling: A single LSTM layer captures temporal dependencies.

- Latent Space Learning: Similar event sequences cluster together in the learned latent space, and this clustering can extend to previously unseen (zero-day) attacks.

- Classification: The final output is a softmax probability distribution over known ATM techniques. The model currently predicts five classes.
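To make the shapes concrete, here is a forward pass of such an architecture in plain NumPy with randomly initialized, untrained weights. The sequence length of 50, embedding dimension of 36, single LSTM layer and five output classes come from the list above. The vocabulary sizes, the hidden size of 64, concatenating the two embeddings, and using the final hidden state as the latent vector are assumptions. A production model would be built and trained in a deep learning framework.

```python
import numpy as np

SEQ_LEN, EMB_DIM, NUM_CLASSES = 50, 36, 5  # values stated in the post
NUM_EVENTS, NUM_SENSORS = 200, 16          # assumption: vocabulary sizes
HIDDEN = 64                                # assumption: LSTM hidden size

rng = np.random.default_rng(0)


def init(*shape):
    """Small random weights; a real model would learn these by training."""
    return rng.normal(0.0, 0.1, size=shape)


params = {
    "event_emb": init(NUM_EVENTS, EMB_DIM),    # event-ID embedding table
    "sensor_emb": init(NUM_SENSORS, EMB_DIM),  # separate sensor-ID embedding table
    "W": init(4 * HIDDEN, 2 * EMB_DIM),        # input weights for the 4 LSTM gates
    "U": init(4 * HIDDEN, HIDDEN),             # recurrent weights for the 4 gates
    "b": np.zeros(4 * HIDDEN),
    "W_out": init(NUM_CLASSES, HIDDEN),        # classification head
    "b_out": np.zeros(NUM_CLASSES),
}


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def softmax(z):
    e = np.exp(z - z.max())  # shift by the max for numerical stability
    return e / e.sum()


def forward(event_ids, sensor_ids, p=params):
    """Return (latent vector, class probabilities) for one sequence of 50 events."""
    # Embed both ID streams and concatenate per time step (assumption): shape (50, 72)
    x = np.concatenate([p["event_emb"][event_ids], p["sensor_emb"][sensor_ids]], axis=1)
    h, c = np.zeros(HIDDEN), np.zeros(HIDDEN)
    for x_t in x:
        i, f, g, o = np.split(p["W"] @ x_t + p["U"] @ h + p["b"], 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)  # forget part of old state, add candidate
        h = sigmoid(o) * np.tanh(c)                   # exposed hidden state
    # Final hidden state serves as the latent representation (assumption)
    probs = softmax(p["W_out"] @ h + p["b_out"])
    return h, probs


event_ids = rng.integers(0, NUM_EVENTS, size=SEQ_LEN)
sensor_ids = rng.integers(0, NUM_SENSORS, size=SEQ_LEN)
latent, probs = forward(event_ids, sensor_ids)
```

The `latent` vector is what a t-SNE projection would visualize, and `probs` is the five-way distribution over ATM technique classes.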

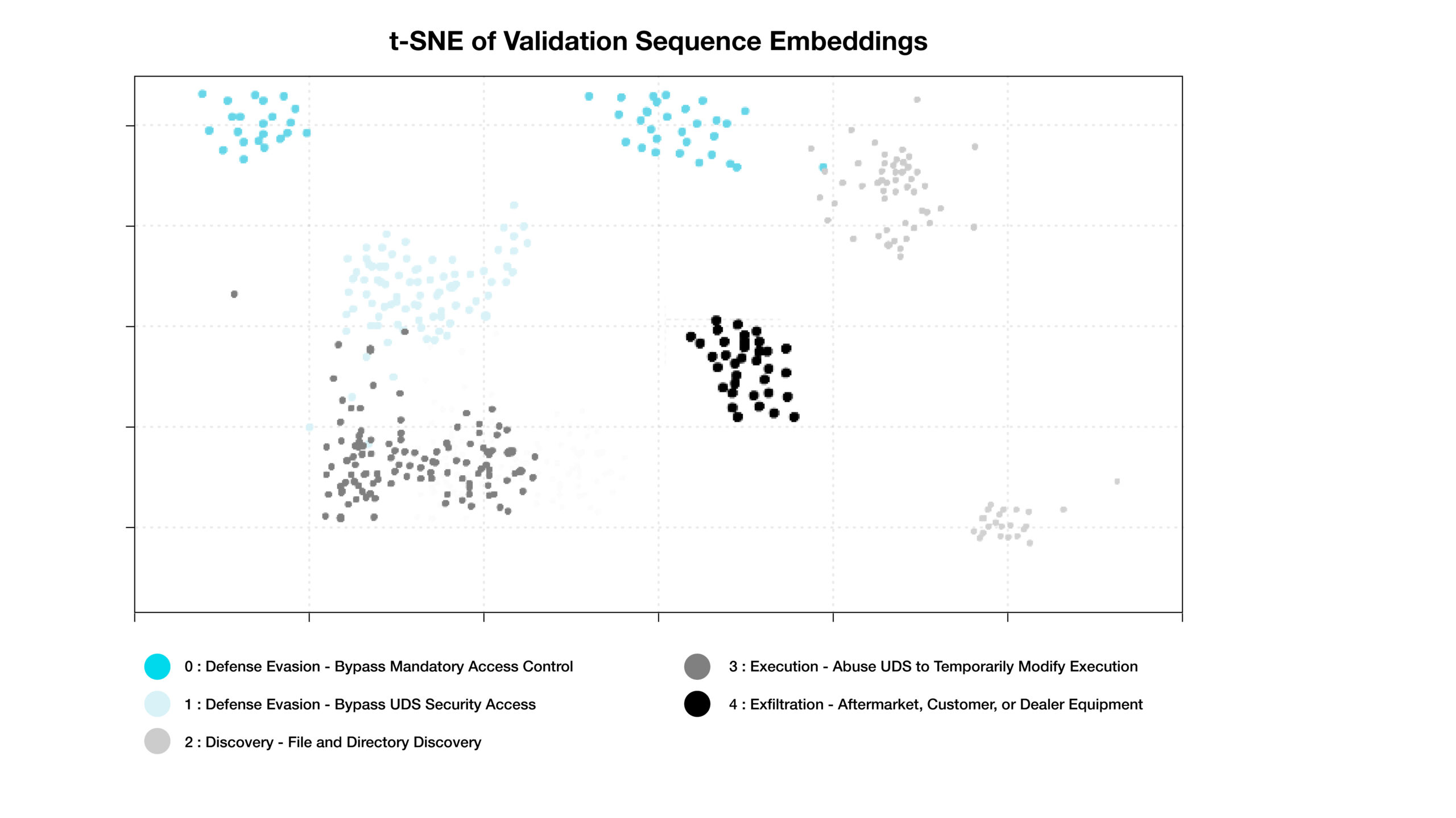

Evaluation & Visualization

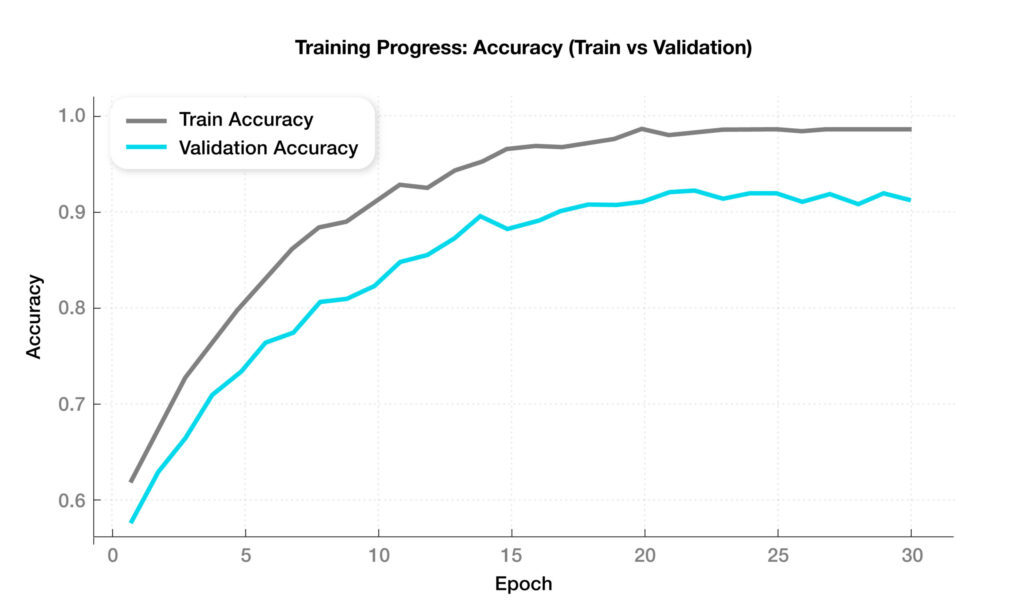

Once trained, the model’s performance is validated visually and statistically.

The t-Distributed Stochastic Neighbor Embedding (t-SNE) plot shows how our model groups similar attack behaviors in a two-dimensional space. By projecting the model’s high-dimensional representations onto this visual map, we can quickly verify whether the model is correctly separating normal activity from suspicious patterns and spot previously unseen threats at a glance.

- Each point represents a sequence of events classified by the system.

- Clusters represent events with similar behavioral patterns, even across different vehicles or attack scenarios.

- The colors represent the actual ground-truth classifications, not the model’s predictions.

This visualization provides an intuitive way to gauge the model’s performance without diving into complex metrics.
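A projection like this can be produced with scikit-learn’s `TSNE`. The sketch below uses synthetic vectors as a stand-in for real model latents (an assumption for illustration); in practice the input would be the latent vector the model produces for each sequence, colored by ground-truth label. Keep in mind that t-SNE preserves local neighborhoods rather than global distances, so cluster sizes and the gaps between clusters should not be over-interpreted.

```python
import numpy as np
from sklearn.manifold import TSNE


def project_latents(latents, perplexity=30.0, seed=0):
    """Project (n_sequences, latent_dim) vectors onto 2-D with t-SNE for plotting."""
    # scikit-learn requires perplexity < n_samples; cap it for small sets
    perplexity = min(perplexity, (len(latents) - 1) / 3)
    tsne = TSNE(n_components=2, perplexity=perplexity, init="pca", random_state=seed)
    return tsne.fit_transform(latents)


# Synthetic stand-in for model latents: three behaviors, 40 sequences each, 64-D.
rng = np.random.default_rng(0)
labels = np.repeat([0, 1, 2], 40)  # ground-truth classes, used only for coloring
centers = rng.normal(0.0, 5.0, size=(3, 64))
latents = centers[labels] + rng.normal(0.0, 1.0, size=(120, 64))
points = project_latents(latents)
# With matplotlib: plt.scatter(points[:, 0], points[:, 1], c=labels)
```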

Training minimizes the cross-entropy loss. The resulting classification metrics are:

- Accuracy: 0.92

- Macro Precision: 0.91

- Macro Recall: 0.90

- Macro F1-score: 0.90
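For readers less familiar with these quantities, the sketch below shows how they are typically computed. Cross-entropy is the mean negative log-probability assigned to the true class, L = -(1/N) Σ log p(yᵢ). “Macro” metrics compute precision, recall and F1 per class and then take the unweighted mean, so every class counts equally regardless of how many samples it has. This follows the common convention (used by scikit-learn) of averaging per-class F1; the post does not state which convention it used, and the toy labels below are illustrative only.

```python
import math


def cross_entropy(probs, labels):
    """Mean negative log-probability of the true class; probs holds one probability list per sample."""
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)


def macro_scores(y_true, y_pred, num_classes):
    """Macro-averaged precision, recall and F1: compute per class, then take the unweighted mean."""
    precisions, recalls, f1s = [], [], []
    for c in range(num_classes):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    n = num_classes
    return sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
precision, recall, f1 = macro_scores(y_true, y_pred, num_classes=3)
loss = cross_entropy([[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]], [0, 1])
```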

Zero-Day Classification

The latent space allows for classifying novel attacks. Even if the model hasn’t seen a specific attack before, if it “walks like a duck and quacks like a duck,” the model recognizes the behavior as malicious.
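The post does not detail the mechanism, but one way to operationalize this idea is to compare a new sequence’s latent vector with the centroids of known classes. Note that a softmax layer on its own always assigns one of the known classes, so a separate similarity check is what lets genuinely novel behavior surface. In the sketch below, the toy 3-D latents, the existence of a benign class, and the 0.8 cosine-similarity threshold are all hypothetical.

```python
import numpy as np


def class_centroids(latents, labels):
    """Mean latent vector per known class (labels are integer class indices)."""
    return {c: latents[labels == c].mean(axis=0) for c in np.unique(labels)}


def assess(latent, centroids, benign_class, min_similarity=0.8):
    """Return (verdict, nearest_class, cosine_similarity) for a new latent vector."""
    best_c, best_sim = None, -1.0
    for c, mu in centroids.items():
        sim = float(latent @ mu / (np.linalg.norm(latent) * np.linalg.norm(mu)))
        if sim > best_sim:
            best_c, best_sim = c, sim
    if best_sim < min_similarity:
        return "unknown: escalate for review", best_c, best_sim
    if best_c == benign_class:
        return "benign", best_c, best_sim
    # Close to a known attack cluster: malicious, even if this exact attack was never seen
    return "malicious: resembles known technique", best_c, best_sim


# Toy latents: class 0 = benign (hypothetical), class 1 = a known attack technique.
latents = np.array([[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 1.0, 0.0], [0.1, 0.9, 0.0]])
labels = np.array([0, 0, 1, 1])
centroids = class_centroids(latents, labels)

print(assess(np.array([0.2, 0.8, 0.1]), centroids, benign_class=0)[0])  # malicious: resembles known technique
print(assess(np.array([0.0, 0.0, 1.0]), centroids, benign_class=0)[0])  # unknown: escalate for review
```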

Empowering the SOC: From Noise to Narrative

After filtering false positives, classifying behaviors, and correlating to known attack scenarios, we transmit enriched, contextualized data to the SOC. Instead of isolated alerts, SOC analysts receive a structured narrative:

- What happened

- How it maps to known attack vectors

- Confidence levels and recommended mitigation steps

This approach significantly reduces analyst fatigue, accelerates incident response, and enables proactive threat mitigation.

Instead of hundreds of isolated CAN alerts, the SOC receives a structured insight, such as: ‘Unauthorized diagnostic session detected – likely persistence attempt (ATM:T1543)’ with confidence and mitigation guidance.
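The structured narrative can be carried as a small JSON document. The schema below is a hypothetical sketch: the field names, vehicle identifier, probabilities, event count and mitigation text are illustrative values, not PlaxidityX’s actual format. It shows how many raw events collapse into one record built from the model’s top prediction.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class EnrichedAlert:
    """Hypothetical schema for the structured insight sent to the SOC."""
    vehicle_id: str
    summary: str             # what happened
    atm_technique: str       # how it maps to known attack vectors
    confidence: float        # model probability for the predicted technique
    source_event_count: int  # how many raw events were folded into this insight
    mitigations: list[str] = field(default_factory=list)


def build_alert(vehicle_id, summary, class_probs, raw_event_count, mitigations):
    """Collapse many raw events into one alert using the highest-probability technique."""
    technique, confidence = max(class_probs.items(), key=lambda kv: kv[1])
    return EnrichedAlert(vehicle_id, summary, technique, round(confidence, 2),
                         raw_event_count, list(mitigations))


alert = build_alert(
    vehicle_id="VIN-EXAMPLE-0001",  # illustrative placeholder
    summary="Unauthorized diagnostic session detected - likely persistence attempt",
    class_probs={
        "Abuse Standard Diagnostic Protocol for Persistence": 0.87,
        "File and Directory Discovery": 0.08,
        "other": 0.05,
    },
    raw_event_count=312,  # illustrative count of underlying raw alerts
    mitigations=["Terminate the diagnostic session", "Verify ECU firmware integrity"],
)
payload = json.dumps(asdict(alert), indent=2)
print(payload)
```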

Bottom Line: Smarter Cars, Smarter Defense

As vehicles become more connected and software-defined, relying solely on perimeter defenses or post-factum SOC analysis isn’t enough. Onboard AI offers a transformative approach, filtering noise, correlating events, and empowering security teams with actionable intelligence.

As AI capabilities evolve, vehicles won’t just defend themselves – they will learn, adapt, and share intelligence across entire fleets.

It’s time for the car itself to become an active participant in its own defense.

Published: January 27th, 2026