Going Behind The Scenes of Docker Networking

Have you ever wondered how the Docker NAT networking mechanism really works? How does it turn its seemingly magical capabilities into practical reality? A few weeks ago I found myself asking the same questions, and today I’m ready to share my insights with you.

Understanding Docker NAT networking is essential for efficient container management and network isolation. It enables containers to interact with external networks through a shared IP address, streamlining traffic and enhancing security. By effectively utilizing this networking approach, developers can optimize resource usage, simplify network configurations, and ensure robust connectivity in complex containerized setups.

Introduction

Let’s start from the beginning. Docker is a set of PaaS products that use OS-level virtualization to deliver software in containers: isolated, self-contained bundles of filesystem, software, configuration, and libraries. Additionally, to quote the official Docker documentation:

“Containers can communicate with each other through well-defined channels”

What is “well-defined”? What are the “channels” and how do they communicate in practice? In this detailed blog post, I will try to find the answers to these questions.

The Docker networking mechanism was built in a way very similar to that of the OS kernel. It uses many of the same concepts, benefits, and capabilities, such as abstractions, but extends them to fit its own needs.

Definitions

Before we dive deeper into the technical analysis, let’s make sure that you are familiar with some important definitions:

- Layer 2: the data link layer, which transfers frames between nodes on the same local network segment. A closely related protocol is ARP, which discovers the MAC address that belongs to a given IP address.

- Layer 3: the network layer, which routes packets between hosts, including hosts on different networks. Example protocols in this layer are IP and ICMP (used by the ping command).

- NAT: Network Address Translation maps one IP address (or subnet) to another. Typically, NAT lets a large private network reach the Internet through a single public IP address. To achieve this, NAT maintains a set of translation rules (generally speaking, address masquerading and port translation).

- Bridge: a network bridge is a device (possibly a virtual one) that connects two or more interfaces into a single flat broadcast network. The bridge maintains a table, the forwarding information base, that maps MAC addresses to interfaces (for example, an entry might look like MAC_1 → IF_1).

- Network Namespace: with namespaces, the kernel can logically separate processes into multiple network “areas”. Each namespace looks like a standalone network, with its own stack, Ethernet devices, routes, and firewall rules. The kernel creates a default namespace at boot, and unless stated otherwise, every process is spawned/forked in it. Every child process inherits its namespace from its parent.

- Veth: a Virtual Ethernet device acts as a tunnel between network namespaces. Veth devices are created in interconnected pairs, and traffic sent into one end comes out of the other. This concept belongs to the Linux kernel; on Windows, container networking works differently.
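
To make the bridge definition above more concrete, here is a minimal Python sketch of a forwarding information base that learns MAC → interface entries. It is a toy model and not how the kernel implements bridging; the class name, port names, and MAC addresses are all made up for illustration.

```python
from typing import Dict, List, Optional


class LearningBridge:
    """Toy model of a bridge's forwarding information base (FIB)."""

    def __init__(self, ports: List[str]) -> None:
        self.ports = list(ports)
        self.fib: Dict[str, str] = {}  # MAC address -> port name

    def receive(self, in_port: str, src_mac: str, dst_mac: str) -> List[str]:
        """Learn the sender's port, then return the ports the frame goes to."""
        self.fib[src_mac] = in_port  # learn: src_mac is reachable via in_port
        out_port: Optional[str] = self.fib.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress one.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            return []  # destination sits behind the ingress port; do nothing
        return [out_port]


# Hypothetical port names and MAC addresses, for illustration only.
bridge = LearningBridge(["veth_a", "veth_b", "veth_c"])
first = bridge.receive("veth_a", "02:42:ac:11:00:02", "02:42:ac:11:00:03")
reply = bridge.receive("veth_b", "02:42:ac:11:00:03", "02:42:ac:11:00:02")
print(first)  # ['veth_b', 'veth_c'] (destination unknown, so the frame is flooded)
print(reply)  # ['veth_a'] (the first frame taught the bridge where this MAC lives)
```

The first frame is flooded because the table is still empty; the reply is delivered to a single port because the bridge has already learned the sender's location.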

Docker Networking

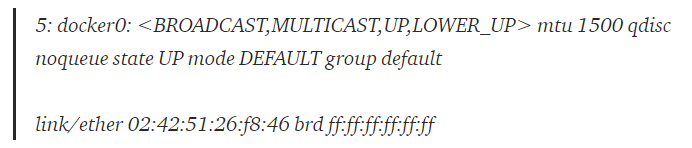

Now that we are familiar with the basic terms, let’s start with our first observation. If you install the Docker daemon and run the following command:

ip link show

You might notice that something has changed:

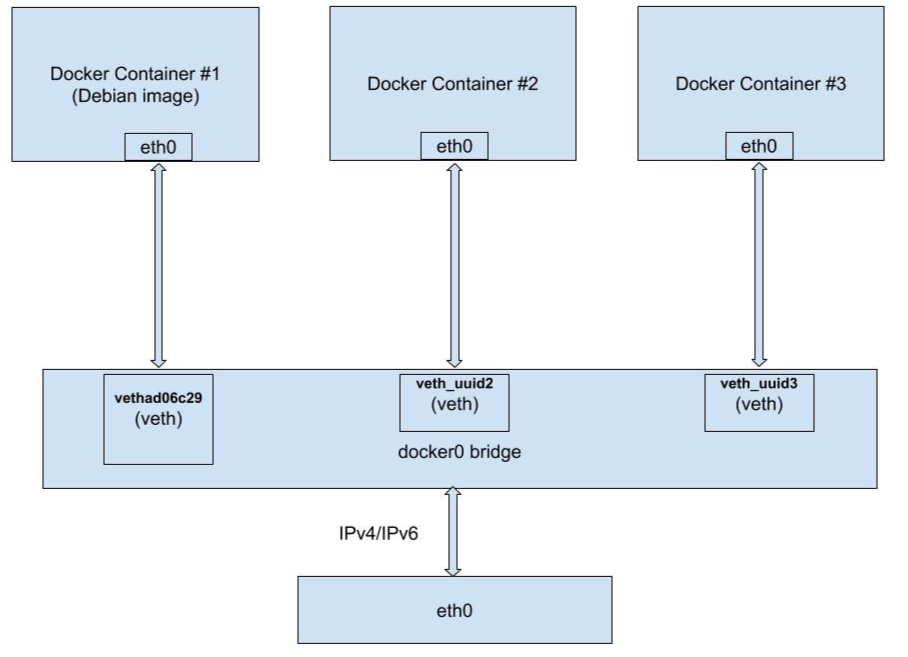

But what exactly changed? What is this MAC address? (You can check that it does not belong to a physical NIC.) docker0 is a virtual bridge interface created by Docker, which assigns it an address and subnet from a predefined private range. By default, all Docker containers are connected to this bridge and use the NAT rules created by Docker to communicate with the outside world. Remember the “channels” I mentioned above? These channels are actually veth “tunnels” (a bi-directional connection between each container namespace and the docker0 bridge).
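
If you prefer to check for these interfaces from code rather than by eye, the following Python sketch lists the interfaces visible to the current process and keeps the ones that look Docker-related. The helper names are my own, and the filter only assumes the naming seen in this post (docker0 and a veth prefix).

```python
import socket
from typing import List


def interface_names() -> List[str]:
    """Return the names of the network interfaces visible to this process."""
    return [name for _index, name in socket.if_nameindex()]


def docker_related(names: List[str]) -> List[str]:
    """Keep names that look like the docker0 bridge or a veth endpoint."""
    return [n for n in names if n == "docker0" or n.startswith("veth")]


if __name__ == "__main__":
    # On a host without Docker, or without running containers, this may be empty.
    print(docker_related(interface_names()))
```

Run it on the Docker host itself: inside a container the process sees only that container's namespace, so docker0 will not show up there.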

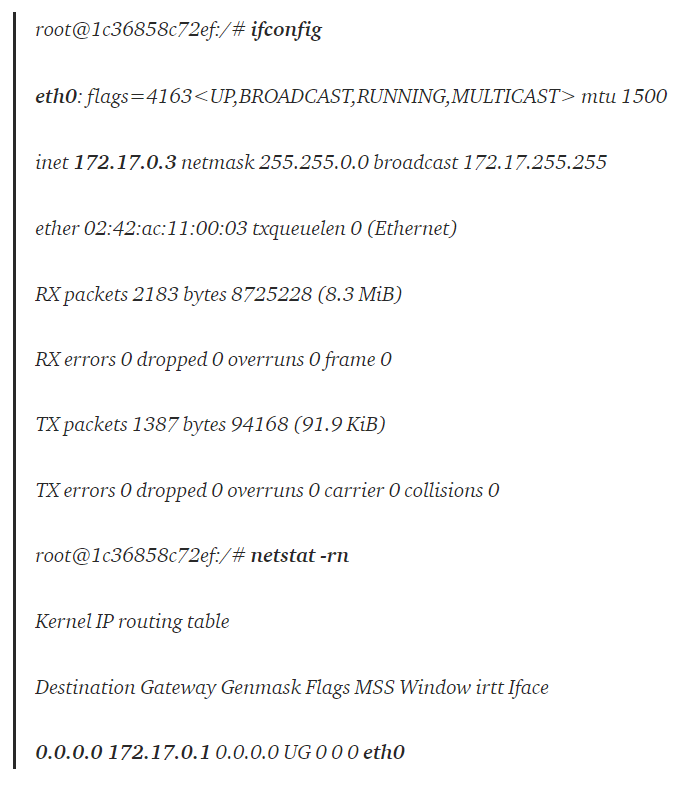

For example, let’s analyze a simple Debian container:

docker run --rm -it debian:stable-slim

When we drill down into its network setup and configuration, we see that the container (like any other Docker container) runs in isolation, with its own network namespace:

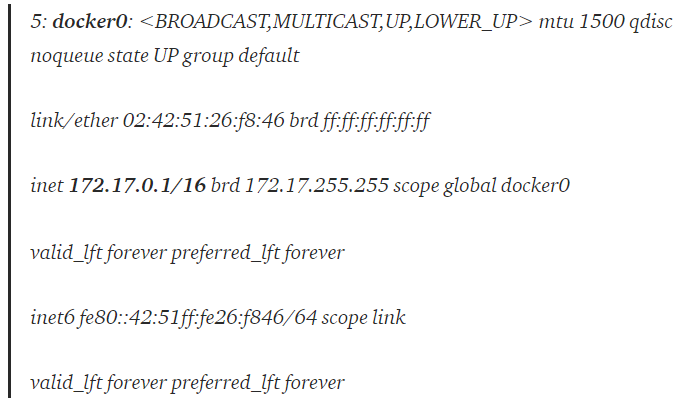

I highlighted the crucial points above: the container holds a layer 2 interface named eth0 and routes traffic for any IP address to the default gateway at 172.17.0.1, which is, unsurprisingly, our docker0 interface:

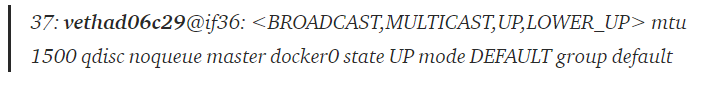

A corresponding veth (identifier vethad06c29) is created to transfer traffic between the container and the bridge:

ip link show | grep veth

Moreover, as you can see, docker0 manages the LAN subnet 172.17.X.X with default gateway 172.17.0.1 (our container above has the IP 172.17.0.3, which falls inside that subnet). To conclude, we now understand how multiple containers can reach each other through the Docker bridge and veth tunneling.
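
The subnet membership mentioned above can be checked with Python's standard ipaddress module. This is a small sketch that assumes "172.17.X.X" means the 172.17.0.0/16 network; the function name is mine, and 192.0.2.10 is just a documentation address used as a counterexample.

```python
import ipaddress

# Assumption: "172.17.X.X" corresponds to the 172.17.0.0/16 network.
docker_subnet = ipaddress.ip_network("172.17.0.0/16")
gateway = ipaddress.ip_address("172.17.0.1")    # the docker0 address
container = ipaddress.ip_address("172.17.0.3")  # the Debian container's address


def on_bridge_subnet(addr: ipaddress.IPv4Address) -> bool:
    """Return True if the address belongs to the docker0 subnet."""
    return addr in docker_subnet


print(on_bridge_subnet(gateway))    # True
print(on_bridge_subnet(container))  # True
print(on_bridge_subnet(ipaddress.ip_address("192.0.2.10")))  # False
```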

Docker and Kernel Networking

How can containers transfer data to the kernel, and from there, to the outside world? Let’s take a closer look at the process as we cover two network manipulation techniques that Docker uses to achieve its external communication capability:

- Port Forwarding: forwards traffic arriving on a specific host port to a port inside a container.

- Host Networking: disables network namespace isolation between the container and the Docker host, so the container shares the host's network stack.
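
Conceptually, port forwarding boils down to a lookup table from a host port to a container endpoint. The Python sketch below models only that idea; the table contents (port 5000 and the address 172.17.0.3) are hypothetical values, and the real translation is done by kernel NAT rules rather than by any code like this.

```python
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)

# Hypothetical published-port table: host port -> container endpoint.
PUBLISHED: Dict[int, Endpoint] = {5000: ("172.17.0.3", 5000)}


def forward(dst_port: int) -> Optional[Endpoint]:
    """Return the container endpoint for a host port, or None if unpublished."""
    return PUBLISHED.get(dst_port)


print(forward(5000))  # ('172.17.0.3', 5000)
print(forward(8080))  # None (nothing is published on this port)
```

With host networking there is no such table at all, because the container's processes bind directly to the host's ports.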

The examples below will demonstrate the communication pipeline for each use case.

Port Forwarding

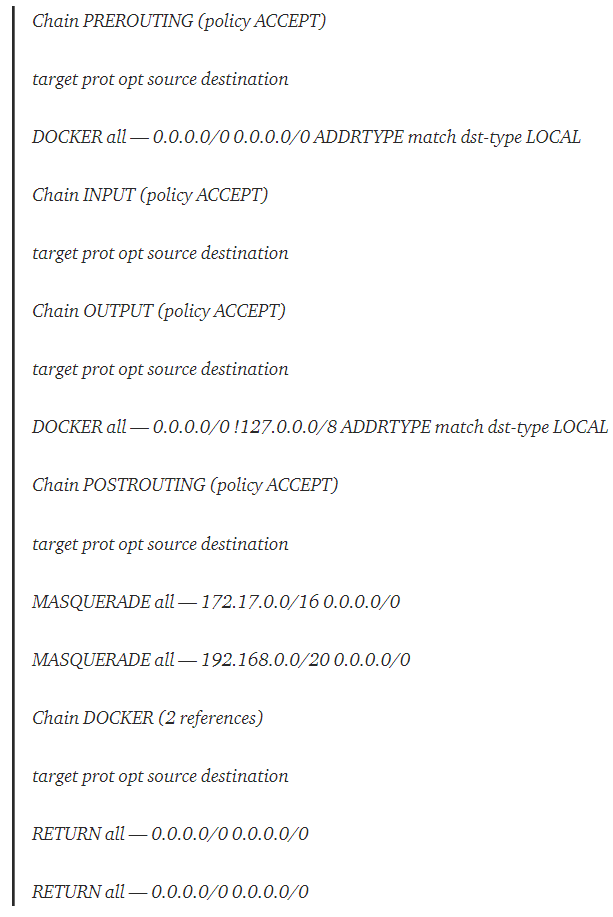

Let’s review the current state of the kernel NAT rules. We’ll look only at the nat table, which is consulted when a packet that creates a new connection is encountered.

sudo iptables -t nat -L -n

We can see five rule sections (a.k.a. chains): PREROUTING, INPUT, OUTPUT, POSTROUTING and DOCKER. We will focus only on PREROUTING, POSTROUTING and DOCKER:

- PREROUTING — rules altering packets before they come into the network stack (immediately after being received by an interface).

- POSTROUTING — rules altering packets before they go out from the network stack (right before leaving an interface).

- DOCKER — rules altering packets before they enter/leave the Docker bridge interface.

The PREROUTING chain hands packets over to the DOCKER chain before they enter the network stack. Currently, the only rule in the DOCKER chain is RETURN (which returns to the calling chain). The POSTROUTING chain specifies that any packet whose source IP is in the Docker subnet (e.g. 172.17.X.X) is subject to MASQUERADE, whatever its destination IP; MASQUERADE overwrites the source IP with the IP of the outgoing interface.
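
To illustrate what MASQUERADE does to a packet, here is a deliberately simplified Python sketch. It assumes the Docker subnet is 172.17.0.0/16 and uses a hypothetical host address (192.0.2.10); the real kernel implementation also tracks connections and may rewrite ports, which this model ignores.

```python
import ipaddress
from dataclasses import dataclass, replace

DOCKER_SUBNET = ipaddress.ip_network("172.17.0.0/16")  # assumed from 172.17.X.X
HOST_IP = "192.0.2.10"  # hypothetical IP of the host's outgoing interface


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


def masquerade(packet: Packet) -> Packet:
    """Rewrite the source IP if the packet comes from the Docker subnet."""
    if ipaddress.ip_address(packet.src) in DOCKER_SUBNET:
        return replace(packet, src=HOST_IP)
    return packet  # other traffic is left untouched


outgoing = masquerade(Packet(src="172.17.0.3", dst="198.51.100.7"))
print(outgoing)  # Packet(src='192.0.2.10', dst='198.51.100.7')
```

From the destination's point of view the packet now comes from the host, which is why many containers can share a single external IP address.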

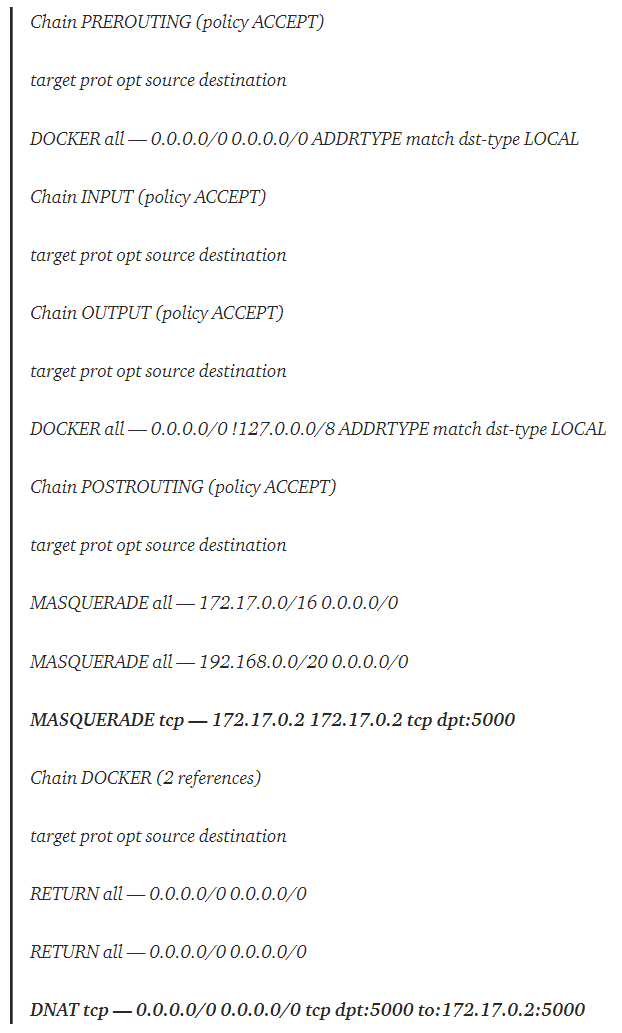

Now, let’s create a lightweight Python HTTP web server container listening on port 5000 and publish that port to the host:

docker run -p 5000:5000 --rm -it python:3.7-slim python3 -m http.server 5000 --bind=0.0.0.0

And again, let’s review the NAT rules:

sudo iptables -t nat -L -n

Published: March 24th, 2025